NVIDIA’s Blackwell AI chip to cost between $30,000 and $40,000

According to a report by CNBC, NVIDIA has spent over $10 billion on research and development of the Blackwell chip, which the company says outperforms every other AI chip on the market by a wide margin.

The new Blackwell AI chip, which is currently in high demand, is slightly more expensive than NVIDIA’s H100 Hopper AI processor. Introduced in 2022, the H100 is said to cost between $25,000 and $40,000. Blackwell’s price includes not just the chip but also the cost of integrating it into the data centre.
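Because the two reported price ranges overlap, "slightly more expensive" is easiest to see by comparing their midpoints. The short Python sketch below does that arithmetic. Using the midpoint of each range is an assumption for illustration only, not a figure reported by NVIDIA or CNBC, and real deal prices will vary.

```python
# Reported per-chip price ranges in USD (low, high).
# Comparing midpoints is a simplifying assumption, not a reported figure.
BLACKWELL_RANGE = (30_000, 40_000)
H100_RANGE = (25_000, 40_000)


def midpoint(price_range: tuple[int, int]) -> float:
    """Return the midpoint of a (low, high) price range."""
    low, high = price_range
    return (low + high) / 2


def premium_pct(new: tuple[int, int], old: tuple[int, int]) -> float:
    """Percentage by which the midpoint of `new` exceeds the midpoint of `old`."""
    return (midpoint(new) / midpoint(old) - 1) * 100


if __name__ == "__main__":
    print(f"Blackwell midpoint: ${midpoint(BLACKWELL_RANGE):,.0f}")
    print(f"H100 midpoint:      ${midpoint(H100_RANGE):,.0f}")
    print(f"Premium:            {premium_pct(BLACKWELL_RANGE, H100_RANGE):.1f}%")
```

Run as a script, it prints midpoints of $35,000 and $32,500, a premium of roughly 7.7 per cent.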

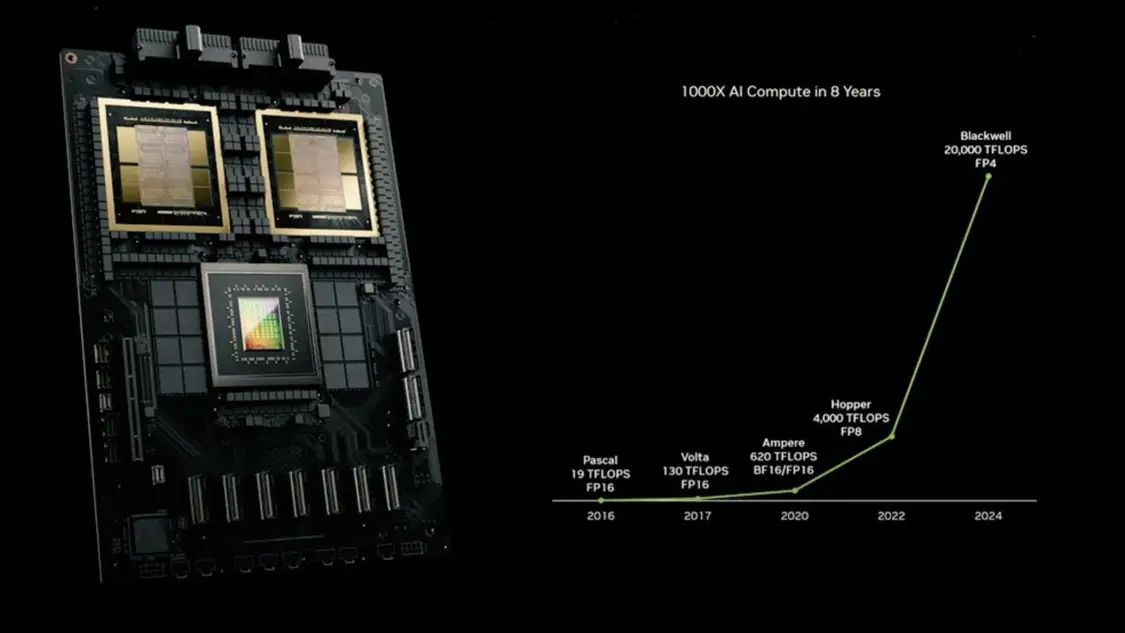

Compared to the H100, the B200 is not only more powerful but also more power-efficient. On Monday, NVIDIA announced three variants of its latest AI accelerator: the B100, the B200, and the GB200, which combines two Blackwell chips with an ARM-based CPU. NVIDIA says Blackwell is four times faster than Hopper for AI training, and the B200, aimed at AI workloads, cloud, and data centres, carries a whopping 192GB of HBM3e memory.

In terms of benchmark numbers, NVIDIA says the GB200 delivers seven times the performance of the H100 on a GPT-3 benchmark, and that 2,000 Blackwell chips could train a 1.8-trillion-parameter model on the scale of GPT-4 in just 90 days.
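To put the training claim in perspective, the Python sketch below works out the scale of a 2,000-chip, 90-day run. It assumes every chip runs for the full 90 days and applies the headline's $30,000 to $40,000 per-chip price to each one; it ignores CPUs, networking, power, and cooling. Treat it as a rough back-of-the-envelope illustration, not a cost estimate from NVIDIA.

```python
# Back-of-the-envelope scale of the 2,000-chip, 90-day training claim.
# Assumptions (not from NVIDIA): every chip runs the full 90 days, and the
# headline's $30,000 to $40,000 per-chip price applies to each chip.
# CPUs, networking, power, and cooling are ignored.
NUM_CHIPS = 2_000
TRAINING_DAYS = 90
PRICE_RANGE_USD = (30_000, 40_000)


def gpu_hours(chips: int, days: int) -> int:
    """Total accelerator-hours if all chips run for the whole period."""
    return chips * days * 24


def chip_cost_range(chips: int, price_range: tuple[int, int]) -> tuple[int, int]:
    """Low and high chip spend for a cluster, given a per-chip price range."""
    low, high = price_range
    return chips * low, chips * high


if __name__ == "__main__":
    hours = gpu_hours(NUM_CHIPS, TRAINING_DAYS)
    low, high = chip_cost_range(NUM_CHIPS, PRICE_RANGE_USD)
    print(f"{hours:,} GPU-hours")
    print(f"${low:,} to ${high:,} in chips alone")
```

Run as a script, it reports 4,320,000 GPU-hours and $60,000,000 to $80,000,000 in chips alone.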

A single Blackwell AI processor offers up to 20 petaflops of FP4 compute and packs 208 billion transistors. According to NVIDIA, the GB200, which pairs two Blackwell processors with a CPU, can deliver up to 30 times the H100’s performance on LLM inference workloads while cutting cost and energy consumption by up to 25 times.